According to a recent Tessian survey, 74% of IT leaders think deepfakes are a threat to their organizations’ and their employees’ security*. Are they right to be worried? We take a look.

What is a deepfake?

A “deepfake” is a fraudulent piece of content (videos or audio recordings) that has been manipulated or created using artificial intelligence.

Deepfakes can be highly convincing, and can trick people into believing that a person did or said something that never happened. Most people associate deepfakes with misinformation, and the use of deepfakes to imitate leaders or celebrities could present a major risk to people’s reputations and to political stability.

Deepfake tech is still young, and not yet sophisticated enough to deceive the public at scale. But some reasonably convincing deepfake clips of Barack Obama and Mark Zuckerberg have provided a glimpse of what the technology is capable of. Deepfakes are also an emerging cybersecurity concern, and businesses will increasingly need to defend against them as the technology improves.

Here’s why security leaders are taking steps to protect their companies against deepfakes.

How could deepfakes compromise security?

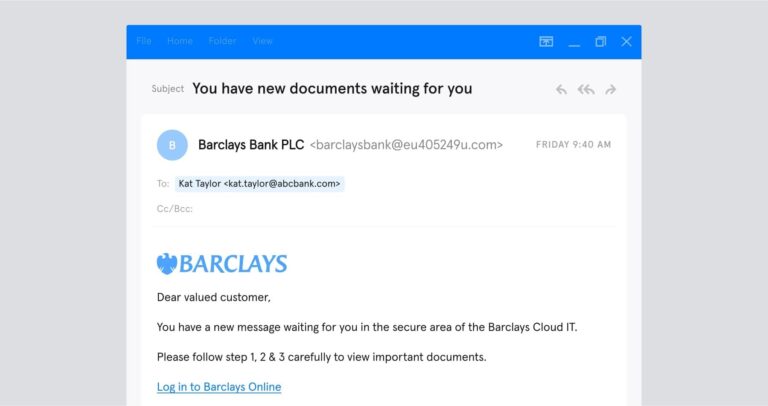

Cybercriminals can use deepfakes in social engineering attacks to trick their targets into providing personal information, account credentials, or money. Social engineering attacks, such as phishing, have always relied on impersonation—some of the most effective types involve pretending to be a trusted corporation (business email compromise), a company’s supplier (vendor email compromise), or the target’s boss (CEO fraud).

Typically, this impersonation takes place via email. But with deepfakes, bad actors can leverage multiple channels. Imagine your boss emails you asking you to make an urgent wire transfer. It seems like an odd request for her to make but, just as you’re reading the email, your phone rings. You pick it up and hear a voice that sounds exactly like your boss’s, confirming the validity of the email and asking you to transfer the funds ASAP. What would you do?

The bottom line is: Deepfake generation adds new ways to impersonate specific people and leverage employees’ trust.

Examples of deepfakes

The first known deepfake attack occurred in March 2019 and was revealed by insurance company Euler Hermes (which covered the cost of the incident). The scam started when the CEO of a U.K. energy firm got a call from his boss, the head of the firm’s German parent company—or rather, someone the CEO thought was his boss.

According to Euler Hermes, the U.K.-based CEO heard his boss’s voice—which had exactly the right tone, intonation, and subtle German accent—asking him to transfer $243,000, supposedly into the account of a Hungarian supplier.

The energy firm’s CEO did as he was asked, only to learn later that he had been tricked. Fraud experts at the insurance firm believe this was an example of an AI-driven deepfake phishing attack. In July 2020, Motherboard reported a failed deepfake phishing attempt targeting a tech firm. Even more concerning, an April 2021 report from Recorded Future found evidence that malicious actors are increasingly looking to use deepfake technology in cybercrime.

The report shows how users of certain dark web forums, plus communities on platforms like Discord and Telegram, are discussing how to use deepfakes to carry out social engineering, fraud, and blackmail. Consultancy Technologent has also warned that new patterns of remote working are putting employees at an even greater risk of falling victim to deepfake phishing—and reported three such cases among its clients in 2020.

But is deepfake technology really that convincing?

Deepfake technology is improving rapidly.

In her book Deepfakes: The Coming Infopocalypse, security advisor Nina Schick describes how recent innovations have substantially reduced the amount of time and data required to generate a convincing fake audio or video clip via AI. According to her, “this is not an emerging threat. This threat is here. Now.” Perhaps more worryingly, deepfakes are also becoming much easier to make.

Deepfake expert Henry Ajder notes that the technology is becoming “increasingly democratized” thanks to “intuitive interfaces and off-device processing that require no special skills or computing power.” And last year, Philip Tully from security firm FireEye warned that non-experts could already use AI tools to manipulate audio and video content. Tully claimed that businesses were experiencing the “calm before the storm”—the “storm” being an oncoming wave of deepfake-driven fraud and cyberattacks.

“76% of U.S. IT leaders believe deepfakes will be used as part of disinformation campaigns in the election.”

How could deepfakes compromise election security?

There’s been a lot of talk about how deepfakes could be used to compromise the security of the 2020 U.S. presidential election. In fact, an overwhelming 76% of IT leaders believe deepfakes will be used as part of disinformation campaigns in the election*.

Fake messages about polling site disruptions, opening hours, and voting methods could affect turnout or prevent groups of people from voting. Worse still, disinformation and deepfake campaigns, in which criminals swap out the messages delivered by trusted voices like government officials or journalists, threaten to cause even more chaos and confusion among voters.

Elvis Chan, a Supervisory Special Agent assigned to the FBI, told us that people are right to be concerned.

“Deepfakes may be able to elicit a range of responses which can compromise election security,” he said. “On one end of the spectrum, deepfakes may erode the American public’s confidence in election integrity. On the other end of the spectrum, deepfakes may promote violence or suppress turnout at polling locations.”

So, how can you spot a deepfake and how can you protect your people from them?

How to protect yourself and your organization from deepfakes

AI-driven technology is likely to be the best way to detect deepfakes in the future. Machine learning techniques already excel at detecting phishing via email because they can pick up tiny irregularities and anomalies that humans can’t spot.

But for now, here are some of the best ways to help ensure you’re prepared for deepfake attacks:

- Ensure employees are aware of all potential security threats, including the possibility of deepfakes. Tessian research* suggests that 61% of IT leaders are already training their teams about deepfakes, with a further 27% planning to do so.

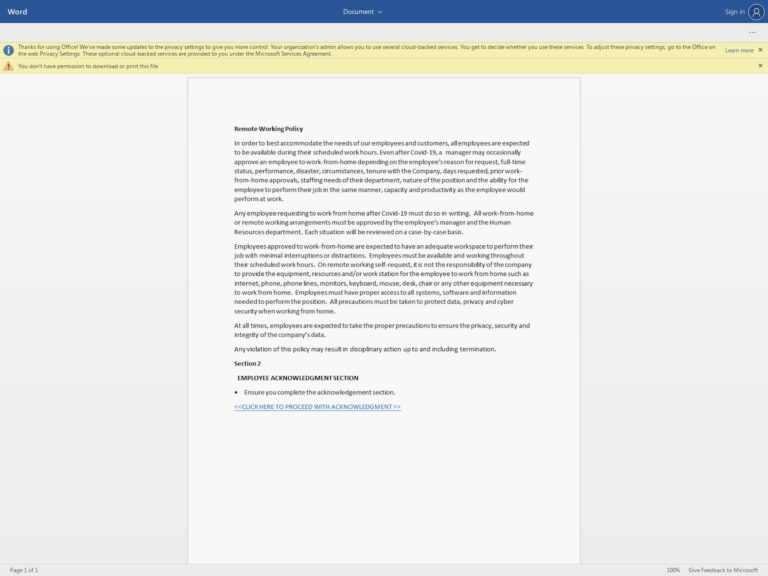

- Create a system whereby employees can verify calls via another medium, such as email. Verification is a good way to defend against conventional vishing (phone phishing) attacks, as well as deepfakes.

- Maintain a robust security policy—so that everyone on your team knows what to do if they have a concern.
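
To make the "verify via another medium" advice concrete, here is a minimal sketch of how a team might write that rule down as a simple check. It is an illustration only, not a Tessian product feature or a recommended standard: the action names, the dollar threshold, and the channel labels are all hypothetical, and a real policy would be set by your own security team.

```python
from dataclasses import dataclass, field

# Hypothetical policy values, chosen only for illustration.
HIGH_RISK_ACTIONS = {"wire_transfer", "credential_reset", "payroll_change"}
VERIFY_ABOVE_USD = 10_000


@dataclass
class Request:
    action: str        # what is being asked for, e.g. "wire_transfer"
    amount_usd: float  # 0 for non-financial requests
    channel: str       # channel the request arrived on, e.g. "phone"
    # Channels on which the employee has independently confirmed the request.
    verified_via: set = field(default_factory=set)


def needs_out_of_band_check(req: Request) -> bool:
    """Return True when the request should be confirmed on a second channel."""
    return req.action in HIGH_RISK_ACTIONS or req.amount_usd >= VERIFY_ABOVE_USD


def may_proceed(req: Request) -> bool:
    """Allow the request only if any required second-channel check happened."""
    if not needs_out_of_band_check(req):
        return True
    # A confirmation on the same channel the request arrived on does not count.
    independent_channels = req.verified_via - {req.channel}
    return len(independent_channels) > 0


# Usage: a phone call asking for a large transfer is held until the employee
# confirms it through a different channel that they initiated themselves.
call = Request(action="wire_transfer", amount_usd=243_000, channel="phone")
print(may_proceed(call))   # held: nothing verified yet
call.verified_via.add("email_to_address_on_file")
print(may_proceed(call))   # allowed: confirmed on an independent channel
```

The design choice worth noting is that a confirmation only counts if it comes from a channel other than the one the request arrived on. In the scenario described earlier, where a convincing call backs up a suspicious email, both messages originate from the attacker, so in practice the second check should be one the employee starts themselves using contact details they already trust.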